The spread of deepfake technology poses a significant threat to cybersecurity. Deepfakes, produced with advanced artificial intelligence (AI) and machine learning (ML) algorithms, can deceive individuals, organizations, and even entire nations. These maliciously crafted audio and video forgeries convincingly mimic real people, making it increasingly difficult to tell fact from fiction. As a result, deepfakes have created a new cyber threat landscape that requires innovative defenses.

To protect the digital world from deepfake attacks, the cybersecurity community is turning to AI and ML. The same class of techniques used to generate deepfakes is now being applied to detect and counter them. This article examines the cybersecurity challenge posed by deepfake attacks and the AI and ML solutions that are crucial for defending against this growing threat.

The Deepfake Challenge

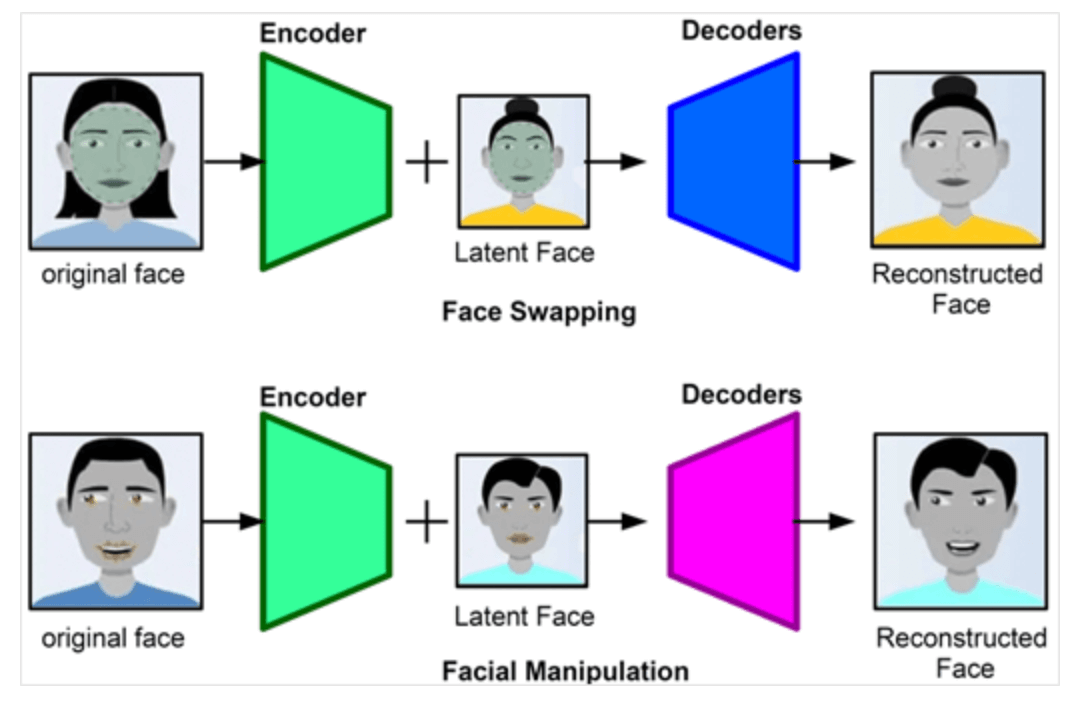

Deepfakes are fabricated media that convincingly impersonate individuals, typically by superimposing one person’s face onto another’s or by synthesizing a person’s voice, so that the target appears to say or do things they never did. These deceptive creations can be used for malicious purposes such as spreading disinformation, damaging reputations, or perpetrating fraud.

The primary cybersecurity challenge deepfakes pose is their potential to compromise trust. Because information now spreads extremely quickly, individuals and organizations depend on being able to trust the authenticity of digital media. Deepfakes exploit that trust, causing confusion, doubt, and harm. For example, a cloned voice of a senior executive could be used to instruct an employee to approve a fraudulent payment. The consequences can therefore reach well beyond a single incident, undermining the principles of authenticity and integrity on which cybersecurity is built.

AI and ML Solutions to Defend Against Deepfake Attacks

To combat the deepfake menace, cybersecurity experts are leveraging AI and ML technologies. These solutions support both proactive measures, which establish the authenticity of media before it circulates, and reactive measures, which detect deepfakes and limit their spread once they appear.

Deepfake Detection Algorithms

AI algorithms are trained to analyze audio and video content for inconsistencies, artifacts, or anomalies characteristic of deepfakes, such as visible blending seams around a swapped face or lip movements that drift out of sync with the audio.

ML models can detect subtle discrepancies in facial expressions, voice modulation, or other cues that may reveal a deepfake’s true nature.
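One common design scores individual frames and then combines those scores into a verdict for the whole clip. The sketch below shows only that aggregation step, assuming a hypothetical per-frame detector that outputs a probability of manipulation; `video_verdict`, the 0.5 threshold, and the top-20% averaging rule are illustrative choices, not a standard method.

```python
from statistics import mean


def video_verdict(frame_scores, threshold=0.5, top_fraction=0.2):
    """Combine per-frame "fake" probabilities into one video-level decision.

    frame_scores: values in [0, 1] from a hypothetical per-frame detector,
    where higher means "more likely manipulated".
    Returns (video_score, is_flagged).
    """
    if not frame_scores:
        raise ValueError("need at least one frame score")
    # Average only the highest-scoring frames so that a short manipulated
    # segment is not diluted by many untouched frames.
    k = max(1, int(len(frame_scores) * top_fraction))
    top_scores = sorted(frame_scores, reverse=True)[:k]
    video_score = mean(top_scores)
    return video_score, video_score >= threshold


if __name__ == "__main__":
    # Ten frames, two of which look manipulated.
    scores = [0.1] * 8 + [0.9, 0.95]
    print(video_verdict(scores))
```

Averaging the top-scoring frames is only one option; a plain mean, the maximum, or a temporal model over frame features trade missed detections against false alarms differently.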

Media Authenticity Verification

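Digital signatures and watermarks can be attached to media content to verify its authenticity. A cryptographic signature shows whether a file has changed since it was signed, and AI models can help embed watermarks designed to survive compression or re-encoding and detect them later. Together these help ensure the integrity of important files and expose tampering.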
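The integrity check behind a signature can be sketched with Python’s standard library. To stay self-contained, this example uses an HMAC (a shared-secret message authentication code) as a stand-in for a true public-key digital signature; `sign_media`, `verify_media`, and the key are hypothetical. A real deployment would use public-key signatures so that anyone can verify a file without holding the signing secret.

```python
import hashlib
import hmac


def sign_media(data: bytes, key: bytes) -> str:
    """Return a hex authentication tag bound to these exact bytes and this key.

    HMAC-SHA256 stands in for a public-key signature in this sketch.
    """
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify_media(data: bytes, key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_media(data, key), tag)


if __name__ == "__main__":
    publisher_key = b"hypothetical-publisher-secret"  # illustration only
    original = b"example video bytes"
    tag = sign_media(original, publisher_key)
    print(verify_media(original, publisher_key, tag))         # True
    print(verify_media(original + b"!", publisher_key, tag))  # False
```

Changing even one byte of the file produces a different tag, which is what lets a verifier detect tampering rather than prevent it.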

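Blockchain technology, in conjunction with AI, can create immutable records of media content by storing a cryptographic fingerprint (hash) of each file when it is published. An altered copy no longer matches its recorded fingerprint, making it harder for malicious actors to pass off manipulated content as authentic.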
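The tamper-evidence property behind such records comes from hash chaining: each entry stores the hash of the entry before it, so editing an earlier entry breaks the links that follow. The minimal sketch below shows only that idea, assuming a file’s SHA-256 hash serves as its fingerprint. `ProvenanceLedger` is hypothetical and runs on a single machine; it is not a blockchain, because it has no replication or consensus, and without other parties holding copies an attacker could simply rewrite the whole chain.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class ProvenanceLedger:
    """Append-only log in which every entry commits to the entry before it."""

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _entry_hash(entry: dict) -> str:
        # Hash only the content fields, serialized deterministically.
        body = {k: entry[k] for k in ("media_id", "media_sha256", "prev_hash")}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, media_id: str, media: bytes) -> dict:
        """Record the SHA-256 fingerprint of a media file at publication time."""
        entry = {
            "media_id": media_id,
            "media_sha256": hashlib.sha256(media).hexdigest(),
            "prev_hash": self.blocks[-1]["hash"] if self.blocks else GENESIS_HASH,
        }
        entry["hash"] = self._entry_hash(entry)
        self.blocks.append(entry)
        return entry

    def chain_is_intact(self) -> bool:
        """True if every entry still hashes correctly and links to its predecessor."""
        prev = GENESIS_HASH
        for entry in self.blocks:
            if entry["prev_hash"] != prev or entry["hash"] != self._entry_hash(entry):
                return False
            prev = entry["hash"]
        return True

    def matches(self, media_id: str, media: bytes) -> bool:
        """True if these exact bytes were recorded under media_id."""
        digest = hashlib.sha256(media).hexdigest()
        return any(
            e["media_id"] == media_id and e["media_sha256"] == digest
            for e in self.blocks
        )


if __name__ == "__main__":
    ledger = ProvenanceLedger()
    ledger.append("clip-1", b"original clip")
    print(ledger.matches("clip-1", b"original clip"))  # True
    print(ledger.matches("clip-1", b"edited clip"))    # False
```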

Real-time Monitoring

AI and ML can be used to continuously monitor social media and other online platforms for the presence of deepfake content.

Automated systems can flag potential deepfake content for further review by human analysts, helping to mitigate the spread of disinformation.
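A flagging step like this can be sketched as a priority queue that hands analysts the most urgent items first. The example assumes a hypothetical upstream detector that assigns each post a score in [0, 1]; the 0.7 threshold and the score-times-reach priority are illustrative assumptions, chosen so that widely shared, likely-fake posts are reviewed before obscure ones.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class ReviewItem:
    priority: float                      # only field used for ordering
    post_id: str = field(compare=False)
    score: float = field(compare=False)


def triage(posts, threshold=0.7):
    """Return IDs of flagged posts, most urgent first.

    posts: iterable of (post_id, detector_score, reach), where detector_score
    comes from a hypothetical deepfake detector and reach is e.g. view count.
    """
    queue = []
    for post_id, score, reach in posts:
        if score >= threshold:
            # heapq is a min-heap, so negate to pop the highest priority first.
            heapq.heappush(queue, ReviewItem(-score * reach, post_id, score))
    return [heapq.heappop(queue).post_id for _ in range(len(queue))]


if __name__ == "__main__":
    incoming = [("a", 0.95, 100), ("b", 0.6, 10_000), ("c", 0.8, 5_000), ("d", 0.9, 50)]
    print(triage(incoming))  # ['c', 'a', 'd']
```

Post "b" has the widest reach but falls below the threshold, so it is not queued; whether such borderline items should be sampled for review anyway is a policy choice outside this sketch.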

Training AI to Detect Deepfakes

To keep pace with evolving deepfake technology, AI and ML models are trained on large datasets of known deepfakes, with the goal of recognizing new, previously unseen variations. Because detectors trained on one generation technique often perform worse on others, ongoing retraining with newly collected samples is needed to keep models up to date as malicious actors change tactics.
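Ongoing training can be illustrated with a model that accepts new labeled samples one at a time. This sketch uses plain logistic regression trained by stochastic gradient descent, a deliberately simple stand-in for the deep networks used in practice; the `OnlineDetector` class and the two-dimensional feature vectors are hypothetical.

```python
import math


class OnlineDetector:
    """Logistic-regression detector that can keep learning from new labeled samples."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        """Probability that a sample is fake (label 1)."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        # Numerically stable logistic function.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, features, label: int):
        """One stochastic-gradient step on log loss (label: 1 = fake, 0 = real)."""
        error = self.predict_proba(features) - label
        self.weights = [
            w - self.learning_rate * error * x for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error


if __name__ == "__main__":
    # Hypothetical 2-D feature vectors (e.g. artifact scores); not real data.
    batch = [([1.0, 0.9], 1), ([0.9, 1.0], 1), ([0.1, 0.0], 0), ([0.0, 0.2], 0)]
    detector = OnlineDetector(n_features=2)
    for _ in range(200):
        for features, label in batch:
            detector.update(features, label)
    # Newly collected deepfake samples are folded in with the same update() call.
    detector.update([0.8, 0.7], 1)
    print(round(detector.predict_proba([1.0, 1.0]), 3))
```

Because `update` processes one sample at a time, newly discovered deepfake examples can be added without retraining from scratch, although it would still be prudent to re-check accuracy on held-out samples from newer generation methods before relying on the updated model.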

Conclusion

The rise of deepfake attacks presents a formidable cybersecurity challenge. AI and ML solutions offer a ray of hope in this digital arms race. By leveraging these technologies to detect and combat deepfake threats, the cybersecurity community can strive to maintain trust and authenticity in our digital world. While deepfake technology continues to evolve, so too do the defenses against it, illustrating the importance of ongoing research and innovation in this critical field. In the battle against deepfakes, our best weapons are the very technologies that gave rise to the threat in the first place.

Author

Karthikeyan M

Karthikeyan is a seasoned cybersecurity leader with 18 years of experience. He has managed projects from conception to delivery, bringing a strategic mindset to streamlining processes and fortifying organizations against cyber threats.